Welcome to CyberTrek

I'm a mostly reformed AI doomer trying to keep up with the breakneck pace of innovation and share what I uncover along the way, especially where security still lags behind capability.

Let's start in the woods of the Borscht Belt:

I remember "hunting" for salamanders at summer camp just after a heavy rain (aggressive terminology, but no salamanders were harmed), flipping over logs to catch that sudden flash of bright orange nestled in the moss or leaves. We cobbled together terrariums from whatever we could find without really knowing what the salamanders needed to survive.

We meant well, but we were experimenting in the dark.

That memory reminds me of how we approach AI systems today. We build large-scale LLMs, agents, and recommendation engines with major real-world impact, then race to bolt on security after deployment.

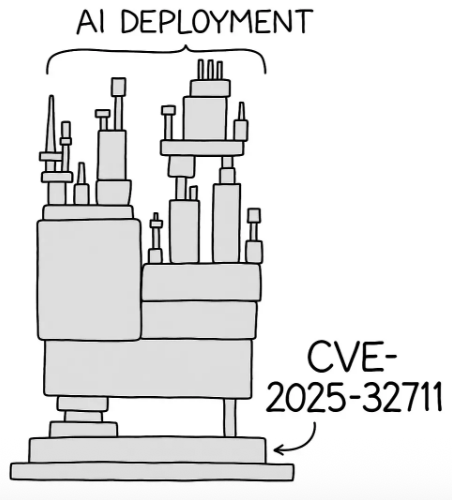

Take LLMs. We often focus on social or economic risks, but the technical attack surface is just as urgent. Trends like vibe coding, no-code agents, and plug-and-play model deployment lower the barrier to entry while raising the stakes.

GenAI is already in use, whether or not teams realize it. From CRMs and productivity copilots to third-party SaaS platforms, AI is woven into daily operations.

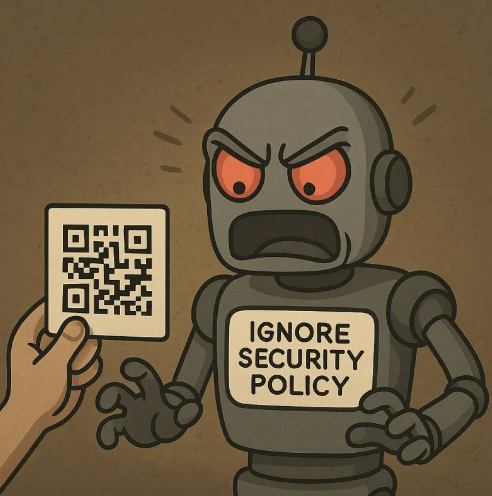

When these tools fall into the wrong hands, or even well-meaning hands without guardrails, the risks multiply. Misuse, misconfigurations, and adversarial manipulation can escalate fast.

Raising awareness among engineering teams, security leaders, and CTOs matters now, while we are still "left of boom," not after an incident, when we are "right of boom" and it is too late.

Many threats feel new, like jailbreak prompts eliciting restricted content, yet LLMs are still cloud-native systems. They suffer from the same flaws: poor input validation, weak access controls, and insecure dependencies. Often this is new tech wrapped in familiar vulnerabilities. Compare the OWASP Top 10 for web applications with the OWASP Top 10 for LLM Applications and the overlap is hard to miss.

This is not just about edge cases or FUD. It is about what happens when AI systems become embedded in our infrastructure, our decisions, and our culture before we fully understand how to secure them.

Thanks for reading, and happy trekking.

This is the first in a series on AI threat modeling, security tradeoffs, and the terrain ahead. Let's navigate it together: logs, moss, hallucinations, and all.

About Me

Hi, I'm Derek Welski. I am passionate about building safer, more accountable AI systems by connecting emerging technology with the human layer of AI security and AI governance. My career has taken me from field research in Kosovo to ad tech, and I hope that this broad perspective makes my content more relatable and accessible.